What Is the Typical Budget for an AI MVP?

Typical AI MVP budget ranges, hidden cost drivers, token math, and a scope-first plan to ship fast without overspending.

Intro

If you are asking “what is the typical budget for an AI MVP,” you are usually trying to avoid two bad outcomes:

- Spending months and a scary amount of money before you learn anything real.

- Shipping a demo that looks like AI, but breaks the moment real users touch it.

This guide gives you a practical answer: budget ranges that match real scope, the AI-specific cost drivers that inflate projects, and a scope-first framework you can use to decide what to build now vs later.

If you want the fastest way to sanity-check scope, start with the scope-first MVP Cost Estimator. It is designed to show what is driving complexity and what to cut first.

Key takeaways

- “AI MVP budget” is two budgets: one-time build and ongoing run (your token bill, monitoring, and iteration).

- Most AI MVPs get expensive because teams try to solve reliability (evaluation, guardrails, edge cases) too early.

- The cheapest AI MVPs are usually workflow tools: AI drafts, classifies, summarizes, routes, or extracts, and a human approves.

- Fine-tuning is rarely step one. Most MVPs should start with API + good prompts + retrieval (if needed).

- You can build a useful AI MVP on a fixed budget, but only if you define one core loop and a ruthless cut list.

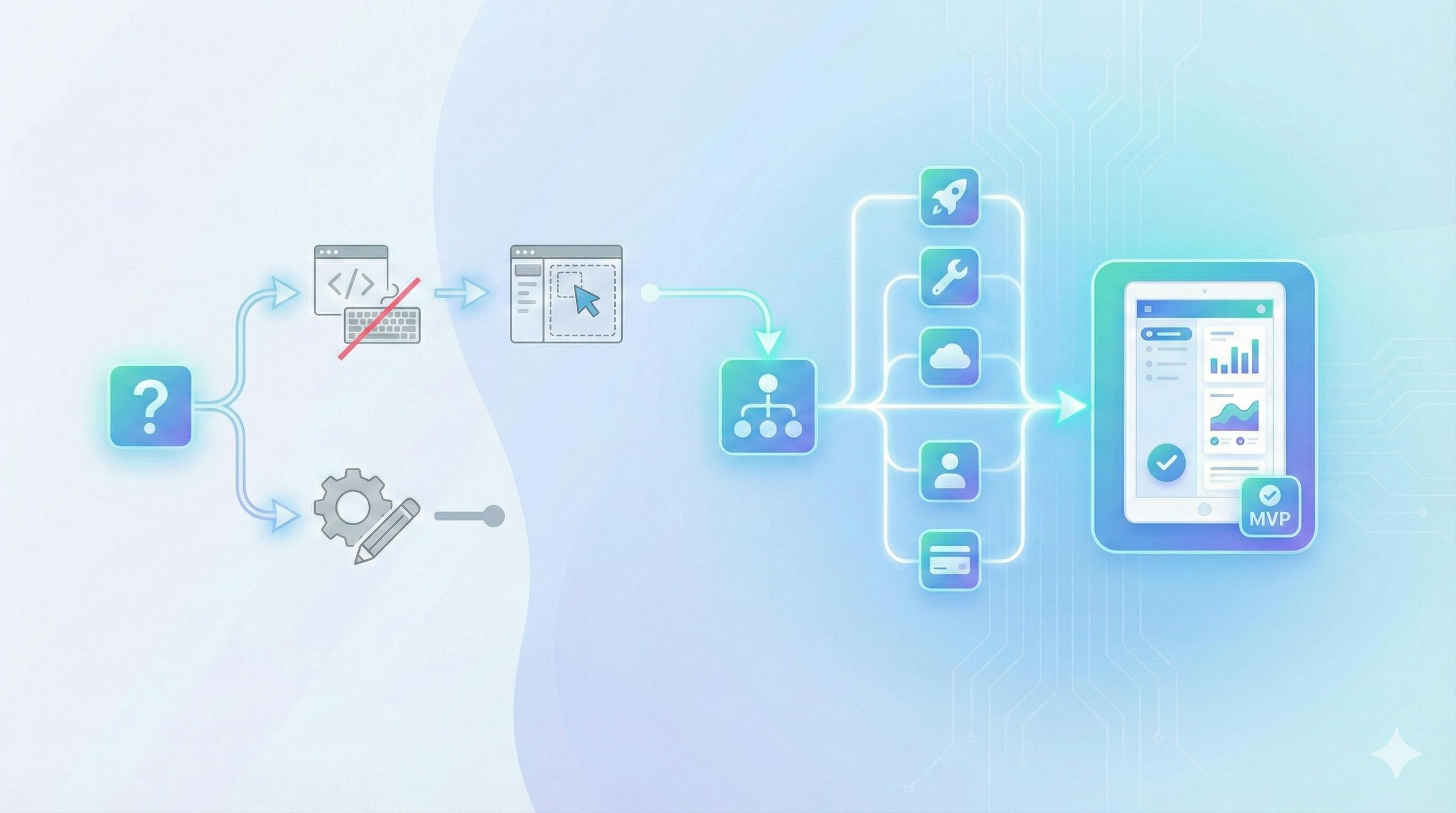

Start with scope, not a number

A “typical budget” is not a single number because an “AI MVP” can mean anything from:

- A simple web app that sends user text to a model and shows a result, to

- A multi-tenant SaaS with roles, billing, retrieval, audit logs, evaluation, and compliance requirements.

If you skip scope, you will get wildly different quotes, and none of them will feel trustworthy.

The two budgets you must separate: build vs run

Build budget (one-time): design, development, QA, deployment, and a first iteration plan.

Run budget (monthly): model inference (tokens), hosting, logs, monitoring, and ongoing changes as you learn.

The big mistake: people plan the build budget and ignore the run budget until the first invoice.

The scope levers that move cost fastest

If you want to reduce AI MVP cost quickly, focus on these levers first:

- Number of user roles and permissions

- Integrations (Slack, Gmail, CRMs, payment providers, internal systems)

- Data sources (uploading docs, connecting databases, scraping, syncing)

- Reliability level (best-effort assistant vs “must be correct” system)

- Human-in-the-loop vs fully automated decisions

If you want a structured way to score these, run the MVP Cost Estimator and treat the result as a complexity signal, not a promise.

Typical AI MVP budget ranges (and what fits each)

Most public “AI app cost” content clusters into tiered ranges.

Here is a more useful version: budget ranges mapped to what you can realistically ship.

Range A: $0 to $3,000, Prototype and validation

What it is: proof of demand, not a production system.

What you build:

- Landing page + waitlist

- Clickable prototype

- A manual “concierge” service where AI assists you behind the scenes

Why it works: you learn messaging, positioning, and willingness to pay before engineering becomes the bottleneck.

When it fails: when you pretend a prototype is a product and users depend on it.

If you are non-technical, you will usually get more leverage from a decision framework than another tool list. Use the build-without-coding decision tree to choose the right path without overcommitting.

Range B: $5,000 to $15,000, AI MVP v1 that ships a real workflow

What it is: a real product with one core loop, not an “AI demo gallery.”

Typical scope that fits:

- One platform (usually web)

- 1–2 roles (admin + user)

- One primary workflow (submit input → AI output → user action)

- Basic admin to manage users and content

- A clear limitation: “AI drafts, human approves”

Best fit use cases:

- Content drafting with approvals

- Customer support draft replies

- Lead qualification summaries

- Document extraction into structured fields

- Internal “ops copilot” for a narrow process

This is the budget zone where fixed-scope packages can work well, because you are forced to ship a small core loop instead of boiling the ocean.

Range C: $15,000 to $50,000, AI product v1.5 (reliability + multi-workflow)

What it is: you are still building an MVP, but you are paying for the things that make AI usable in production.

What increases cost here:

- Retrieval (RAG) across company docs

- Multi-step workflows (agents, tool calls, background jobs)

- Better evaluation and regression testing

- More roles, more permissions, more edge cases

- Monitoring, analytics, and audit logs

This is often where founders realize “AI MVP” was shorthand for “AI + product + reliability + operations.”

Range D: $50,000 to $250,000+, custom model work and high-stakes domains

What it is: custom training, proprietary model pipelines, or high-risk environments where mistakes are expensive.

What usually triggers this range:

- Training or fine-tuning on large proprietary datasets

- Complex multimodal inputs (audio, images, medical scans, etc.)

- Strong compliance needs (auditability, traceability, governance)

- Performance constraints that require self-hosting

If you are here, you are not “just building an MVP.” You are building an AI capability.

The AI cost drivers most teams underestimate

AI MVPs get expensive for different reasons than normal SaaS. The biggest hidden drivers are not “using AI,” they are making AI behave.

Data access and permissions

The moment your AI touches real user or company data, you need:

- Access control

- Data boundaries per user or per workspace

- A plan for what gets stored, and what does not

Even for a simple MVP, data handling decisions create architecture and testing work.

Retrieval (RAG) and knowledge plumbing

A lot of AI MVPs are basically: “Answer questions using our docs.”

That sounds simple, but “docs” implies:

- ingesting files

- chunking and embeddings

- search and ranking

- citations and traceability (even if lightweight)

RAG can be worth it, but it moves you out of “cheap wrapper” territory quickly.
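To make the plumbing concrete, here is a minimal sketch of the ingest, chunk, rank, and cite steps listed above. It is a toy under stated assumptions: `embed` uses plain word counts as a stand-in for a real embedding model, the documents and file names are hypothetical, and a production system would use a proper embedding API and vector store.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 40) -> list[str]:
    """Split text into chunks of roughly `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, docs: dict[str, str], top_k: int = 2) -> list[tuple[str, str, float]]:
    """Return the top_k (source, chunk, score) triples for the question."""
    q = embed(question)
    scored = [
        (source, piece, cosine(q, embed(piece)))
        for source, text in docs.items()
        for piece in chunk(text)
    ]
    return sorted(scored, key=lambda row: row[2], reverse=True)[:top_k]


# Hypothetical documents; the source name doubles as a lightweight citation.
docs = {
    "refunds.md": "Refunds are issued within 14 days of purchase when the order is unused.",
    "shipping.md": "Standard shipping takes five business days within the country.",
}
for source, piece, score in retrieve("How long do refunds take?", docs, top_k=1):
    print(f"[{source}] {piece} (score={score:.2f})")
```

Even in this toy, every step is a place where real projects spend time: chunk size, ranking quality, and how sources are surfaced to the user.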

Evaluation and hallucination containment

If your AI output influences decisions, you need to test:

- correctness on representative cases

- consistency over time

- failures when inputs are messy

This is one reason many teams use “human approval” in v1. It is not just safer, it is cheaper.
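A lightweight version of this testing can start as a small regression check that you rerun whenever a prompt or model changes. The sketch below is illustrative only: `fake_model` is a placeholder for a real model call, the cases are hypothetical, and substring matching is a deliberately crude pass criterion.

```python
from typing import Callable


def run_eval(model: Callable[[str], str], cases: list[tuple[str, str]]) -> float:
    """Return the fraction of cases whose output contains the expected text."""
    passed = 0
    for prompt, expected in cases:
        output = model(prompt)
        if expected.lower() in output.lower():
            passed += 1
        else:
            print(f"FAIL: {prompt!r} -> {output!r} (expected {expected!r})")
    return passed / len(cases) if cases else 0.0


def fake_model(prompt: str) -> str:
    """Placeholder for a real model call; always returns the same category."""
    return "Category: billing"


# Hypothetical representative cases, including one messy input.
cases = [
    ("I was charged twice this month", "billing"),
    ("  cant LOGIN!!! pls help ", "account"),
]
pass_rate = run_eval(fake_model, cases)
print(f"Pass rate: {pass_rate:.0%}")
```

Tracking the pass rate over time gives you a rough consistency signal without building a full evaluation platform.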

Safety and security costs are real costs

If you ship anything that accepts user input, you are exposed to issues like prompt injection and data leakage. OWASP’s Top 10 for LLM applications is a good reality check for what you might need to defend against as you move from MVP to production.

You do not need enterprise security in v1, but you do need basic risk awareness so you do not design yourself into a corner.

Latency and UX

Fast AI feels magical. Slow AI feels broken.

If your flow is “user waits 20 seconds,” you will spend time on:

- streaming responses

- better prompts

- caching

- fallbacks and partial results

These are product decisions that become engineering decisions.
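As a rough illustration of two of these items, caching and fallbacks, the sketch below wraps a model call with an in-memory cache and a timeout. The `call_model` argument, the fallback message, and the timeout value are all assumptions for the example, not a recommended configuration.

```python
import concurrent.futures
import hashlib
from typing import Callable

FALLBACK = "Still working on this one. Please try again in a moment."

_cache: dict[str, str] = {}
_pool = concurrent.futures.ThreadPoolExecutor(max_workers=4)


def answer(prompt: str, call_model: Callable[[str], str], timeout_s: float = 10.0) -> str:
    """Return a cached answer if present; otherwise call the model with a timeout."""
    key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
    if key in _cache:
        return _cache[key]
    future = _pool.submit(call_model, prompt)
    try:
        result = future.result(timeout=timeout_s)
    except Exception:
        # Covers both timeouts and errors raised by the model call.
        # On a timeout the call keeps running in the background;
        # this sketch simply discards its result.
        return FALLBACK
    _cache[key] = result
    return result


def slow_upper(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    return prompt.upper()


print(answer("summarize this ticket", slow_upper))
print(answer("summarize this ticket", slow_upper))  # second call is served from the cache
```

A cache like this only helps when identical prompts repeat, which is itself a product question: the more personalized each request, the less you can reuse.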

Human-in-the-loop

Human approval is not a failure, it is a design strategy.

It lets you ship earlier because:

- the model can be “helpful” before it is “correct”

- you collect real examples of what users accept or reject

- you build a dataset for later improvements
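One way to capture those accept and reject signals is to log every review as a line of JSON. This is a minimal sketch with hypothetical field names; it writes to any text stream, so the in-memory buffer below could be swapped for a file or a database writer.

```python
import io
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import TextIO


@dataclass
class Review:
    input_text: str
    ai_draft: str
    final_text: str
    accepted: bool  # True when the human sent the draft unchanged
    reviewed_at: str


def record_review(stream: TextIO, input_text: str, ai_draft: str, final_text: str) -> Review:
    """Append one human review to the stream as a JSON line."""
    review = Review(
        input_text=input_text,
        ai_draft=ai_draft,
        final_text=final_text,
        accepted=ai_draft.strip() == final_text.strip(),
        reviewed_at=datetime.now(timezone.utc).isoformat(),
    )
    stream.write(json.dumps(asdict(review)) + "\n")
    return review


log = io.StringIO()  # stand-in for a real log file
record_review(log, "Where is my order?", "It ships Monday.", "It ships Monday.")
record_review(log, "Can I get a refund?", "No.", "Yes, within 14 days of purchase.")
rows = [json.loads(line) for line in log.getvalue().splitlines()]
print(f"Accepted {sum(r['accepted'] for r in rows)} of {len(rows)} drafts")
```

The edited drafts are the valuable part: each pair of AI draft and human correction is an example you can later use for evaluation or prompt improvements.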

Build vs buy for AI MVPs

“Build vs buy” in AI MVPs usually means: what do you delegate to existing platforms vs what do you own.

API-first vs self-hosted

For most MVPs, API-first wins:

- faster to ship

- easier to change models

- lower infrastructure burden

Self-hosting becomes rational when you have:

- strict data constraints

- cost at scale that beats APIs

- specialized latency requirements

For v1 validation, most teams do not.
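To see why, it helps to estimate the request volume at which self-hosting would start to beat an API on cost alone. The sketch below is a simplified break-even calculation; all dollar figures are assumed for illustration and ignore engineering time, which usually makes self-hosting look even worse early on.

```python
import math


def break_even_requests(
    api_cost_per_request: float,
    selfhost_fixed_monthly: float,
    selfhost_cost_per_request: float = 0.0,
) -> float:
    """Monthly request volume above which self-hosting is cheaper than the API.

    Returns math.inf when self-hosting never wins on cost alone.
    """
    saving_per_request = api_cost_per_request - selfhost_cost_per_request
    if saving_per_request <= 0:
        return math.inf
    return selfhost_fixed_monthly / saving_per_request


# Assumed figures: $0.01 per API request, $3,000/month for servers and upkeep.
volume = break_even_requests(api_cost_per_request=0.01, selfhost_fixed_monthly=3_000)
print(f"Break-even at about {volume:,.0f} requests/month")
```

If your expected volume is far below the break-even figure, the API-first path is the cheaper way to learn.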

Fine-tuning vs RAG vs prompt engineering

A simple rule of thumb:

- Prompt engineering first (cheap, fast)

- RAG next if you need proprietary knowledge

- Fine-tuning later if you have stable tasks and enough data

Fine-tuning is often used too early because it feels like “real AI,” but it rarely fixes product problems.

Third-party tools that reduce build cost (and when they add risk)

Tools can save time, but they also add:

- lock-in risk

- permission and security complexity

- operational overhead

If your MVP needs to be rebuilt later, choose tools that keep you portable:

- own your domain

- own your database

- keep your prompts and evaluation artifacts versioned

Token math: the simplest way to estimate monthly AI spend

Most “AI MVP budget” articles skip the monthly bill, but for many AI products it becomes the real budget story.

A practical formula

Estimate monthly inference cost like this:

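Monthly cost ≈ Requests per month × (Avg input tokens × input $/token + Avg output tokens × output $/token)

Input and output tokens are usually priced at different rates, so keep the two rates separate.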

Then add buffer for:

- retries

- longer conversations

- peak usage

You can pull current per-token rates from provider pricing pages (example: OpenAI API pricing).

If you use a managed provider like Bedrock, pricing also varies by model and usage type.
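The formula above translates directly into a few lines of code. This is a minimal sketch: the prices are placeholder values in dollars per one million tokens (a unit many pricing pages use), and the 25% buffer is an assumption, not a recommendation.

```python
def monthly_inference_cost(
    requests_per_month: int,
    avg_input_tokens: float,
    avg_output_tokens: float,
    input_price_per_million: float,
    output_price_per_million: float,
    buffer: float = 0.0,
) -> float:
    """Estimate monthly inference spend in dollars.

    `buffer` is a fractional margin for retries, longer conversations,
    and peak usage (0.25 means +25%).
    """
    per_request = (
        avg_input_tokens * input_price_per_million
        + avg_output_tokens * output_price_per_million
    ) / 1_000_000
    return requests_per_month * per_request * (1.0 + buffer)


# Hypothetical numbers for illustration only; check your provider's pricing page.
estimate = monthly_inference_cost(
    requests_per_month=10_000,
    avg_input_tokens=1_500,
    avg_output_tokens=500,
    input_price_per_million=2.00,   # assumed $/1M input tokens
    output_price_per_million=8.00,  # assumed $/1M output tokens
    buffer=0.25,
)
print(f"Estimated monthly inference cost: ${estimate:,.2f}")
```

Swapping in real rates and your own traffic assumptions takes a minute and gives you a first run-budget number to challenge.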

Three example monthly budgets (simple, realistic, not perfect)

These are illustrative, not promises.

Example 1: Internal drafting tool

- 2,000 requests/month

- short prompts, short outputs

- result: typically tens of dollars to low hundreds/month depending on model choice and prompt size

Example 2: Customer support assistant with retrieval

- 10,000 requests/month

- longer context, citations, more output

- result: can move into hundreds to a few thousand/month as context grows

Example 3: High-usage consumer AI feature

- 100,000+ requests/month

- long context, personalization

- result: the monthly bill becomes a product constraint, and you will design UX around cost

The lesson: your UX is your cost model. Long chat threads, huge context, and “always-on” features are not free.
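To put rough numbers on the three examples, the sketch below applies the token formula to each scenario. Every figure is an assumption chosen for illustration: the per-request token counts are guesses at "short" versus "long" context, and the prices are hypothetical, not taken from any provider's price list.

```python
from dataclasses import dataclass

# Assumed prices in dollars per 1M tokens; not from any real price list.
INPUT_PRICE = 3.00
OUTPUT_PRICE = 15.00


@dataclass
class Scenario:
    name: str
    requests: int       # requests per month
    input_tokens: int   # assumed average per request
    output_tokens: int  # assumed average per request

    def monthly_cost(self) -> float:
        per_request = (
            self.input_tokens * INPUT_PRICE + self.output_tokens * OUTPUT_PRICE
        ) / 1_000_000
        return self.requests * per_request


scenarios = [
    Scenario("Internal drafting tool", 2_000, 800, 400),
    Scenario("Support assistant with retrieval", 10_000, 6_000, 800),
    Scenario("High-usage consumer feature", 100_000, 12_000, 1_000),
]
for s in scenarios:
    print(f"{s.name}: ~${s.monthly_cost():,.0f}/month")
```

Under these assumptions the three scenarios come to roughly $17, $300, and $5,100 per month. In the third scenario most of the spend comes from input tokens, which is the long-context effect described above.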

A scope-first budget framework (the one that keeps you honest)

If you want a typical budget that is actually usable, do this before you pick a number.

Step 1: Define the AI core loop in one sentence

Pick one:

- “User submits X, AI produces Y, user approves and sends.”

- “User uploads Z, AI extracts fields, user corrects.”

- “User asks a question, AI answers with citations, user takes action.”

If you cannot write the loop, you cannot budget it.

Step 2: Pick one reliability level for v1

Choose only one:

- Helpful: good enough drafts, human verifies

- Consistent: fewer surprises, still not perfect

- Trusted: strong evaluation, guardrails, auditability

Trying to buy “trusted” on day one is what drives budgets into Ranges C and D.

If you want baseline MVP budgeting logic first (tiers and timelines), anchor it with this guide: MVP cost tiers and timeline tradeoffs.

Step 3: Write the cut list before you build

A good AI MVP cut list usually includes:

- Multi-tenant teams and invites (defer if possible)

- Multiple integrations (start with one)

- Complex role matrices (start with admin + user)

- “Fully automated actions” (start with approve/confirm)

- Fancy analytics (start with basic logging)

If you do this up front, your budget becomes a scope decision, not a guessing game.

Can you build an AI MVP for $5k?

Yes, if the AI MVP is the right shape.

A fixed budget forces the right question:

What is the smallest AI-assisted workflow that proves the value?

What fits $5k (realistic)

- Web MVP

- One core loop

- AI as a helper (draft, summarize, extract, classify)

- Human approval in the workflow

- Basic admin and deployment

This matches the intent of a fixed-scope MVP package, where you ship the core loop first and plan iteration after: see the fixed-price $5,000 MVP package.

What usually does not fit $5k

- Custom model training or fine-tuning as the centerpiece

- Multiple complex integrations

- High-stakes “must be correct” automation

- Deep multi-tenant permission systems

- Heavy compliance requirements

If you still want a fixed-scope approach, the clean move is: v1 proves the loop, v1.1 improves reliability.

If you want help scoping it to something shippable, start from the service overview: fixed-scope MVP development.

Partner choice for AI MVPs (freelancer vs agency, with AI reality)

AI adds uncertainty. Uncertainty punishes loose ownership.

When a freelancer can work

- very tight scope

- you can product manage actively

- minimal integrations

- you can tolerate some rework

When a small team/agency is safer

- you need design + build + deployment

- you want predictable weekly shipping

- you care about handoff quality and documentation

- you need someone to own the whole pipeline

If you want to reduce partner risk quickly, use a checklist instead of vibes: freelancer vs agency risk checklist.

Conclusion: the “typical budget” is the one that buys learning fastest

A typical AI MVP budget is not about AI. It is about learning speed per dollar.

- If you need proof of demand, keep it cheap and validate first.

- If you need a workflow MVP, ship one core loop with human approval.

- If you need reliability, budget for evaluation and guardrails, not more features.

If you want a scope-first sanity check, run the MVP Cost Estimator and use the cut list to fit your budget.

If you want a fixed, predictable build path, review the fixed-price $5,000 MVP package.

FAQ

What is a realistic budget for an AI MVP in 2026?

Most teams land in tiers: low-cost prototype ($0–$3k), shippable AI MVP ($5k–$15k), reliability-focused MVP ($15k–$50k), and custom model efforts beyond that.

Can I build an AI MVP without hiring an ML engineer?

Often yes. Many MVPs can use an API model plus good prompts and a human-in-the-loop workflow. The ML-heavy work usually shows up when you need custom training or strict reliability.

Is fine-tuning required for most AI MVPs?

Usually no. Start with prompt engineering and retrieval if you need proprietary knowledge. Fine-tuning tends to be a later-stage optimization.

What are the ongoing monthly costs of an AI MVP?

Typically: inference tokens, hosting, logging/monitoring, and iteration. Token costs depend on model choice and usage patterns.

What is the cheapest reliable AI MVP architecture?

A narrow workflow with AI drafting plus human approval, minimal roles, minimal integrations, and strong logging. Add retrieval only if it is essential.

When should I avoid AI in v1?

When your value is not improved by AI, or when correctness requirements are so strict that you will spend your whole budget on reliability before you prove demand.

How long does an AI MVP usually take to build?

For tightly scoped MVPs, a matter of weeks. As scope expands (roles, integrations, retrieval, evaluation), timelines stretch accordingly.

How do I scope an AI MVP to a fixed budget?

Define one core loop, choose a reliability level, write the cut list, then sanity-check complexity with a scope-first estimator.
